Tom Monnier

Research Scientist at Meta

I am a Research Scientist at Meta working on computer vision, with an emphasis on 3D modeling and 3D generation. I did my PhD in the amazing Imagine lab at ENPC under the guidance of Mathieu Aubry. During my PhD, I was fortunate to work with Jean Ponce (Inria), Matthew Fisher (Adobe Research), Alyosha Efros and Angjoo Kanazawa (UC Berkeley). Before that, I completed my engineer's degree (equivalent to an M.Sc.) at Mines Paris.

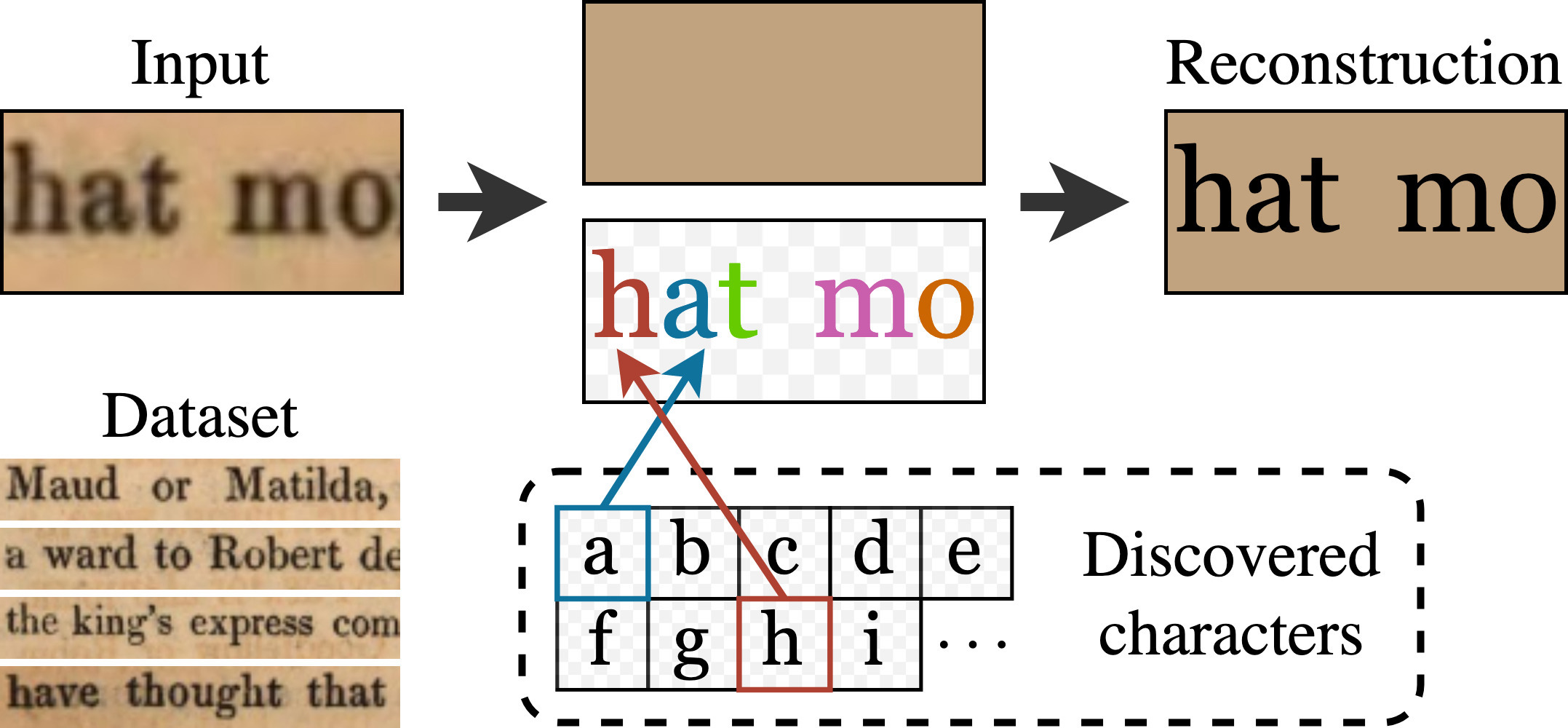

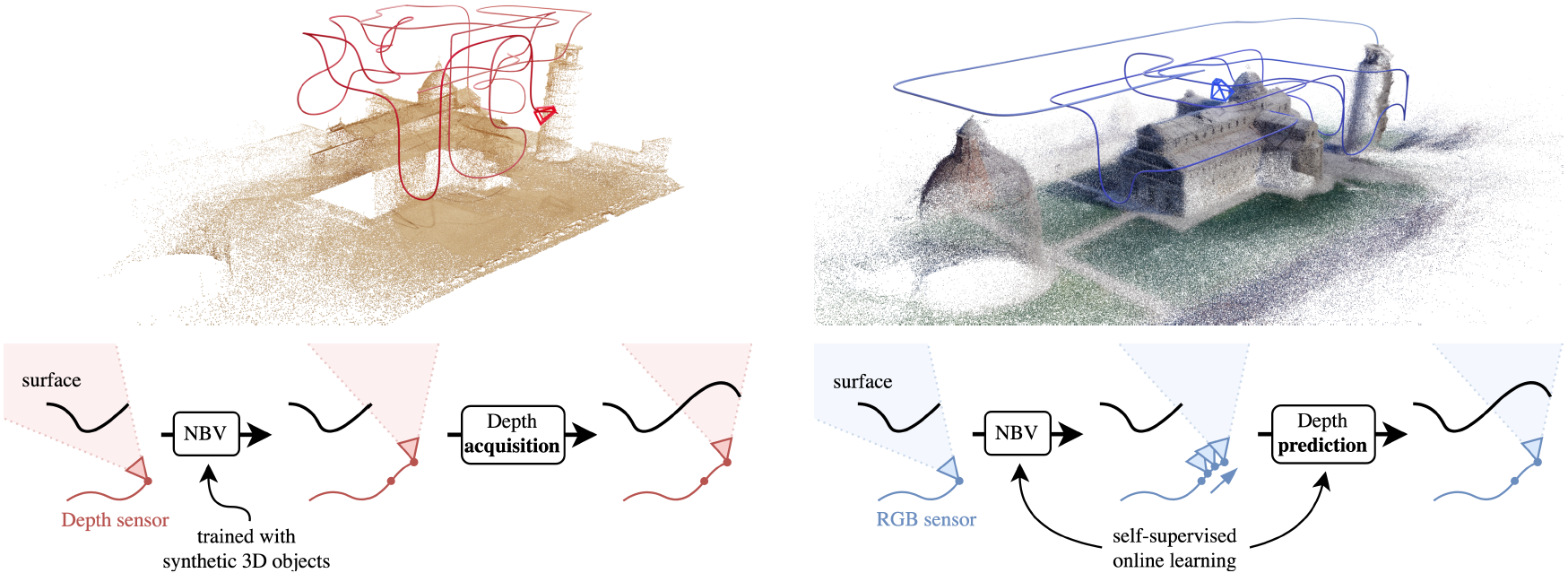

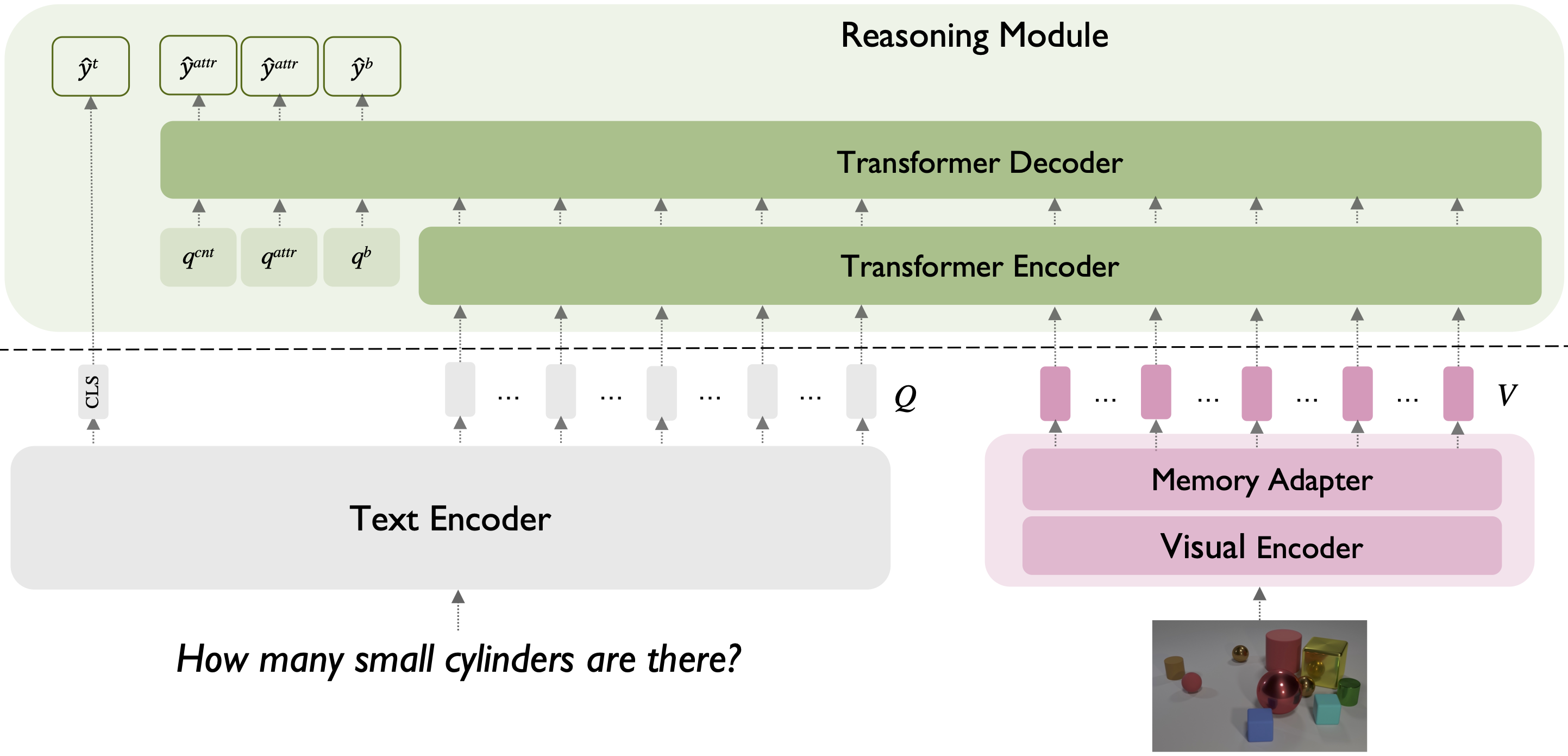

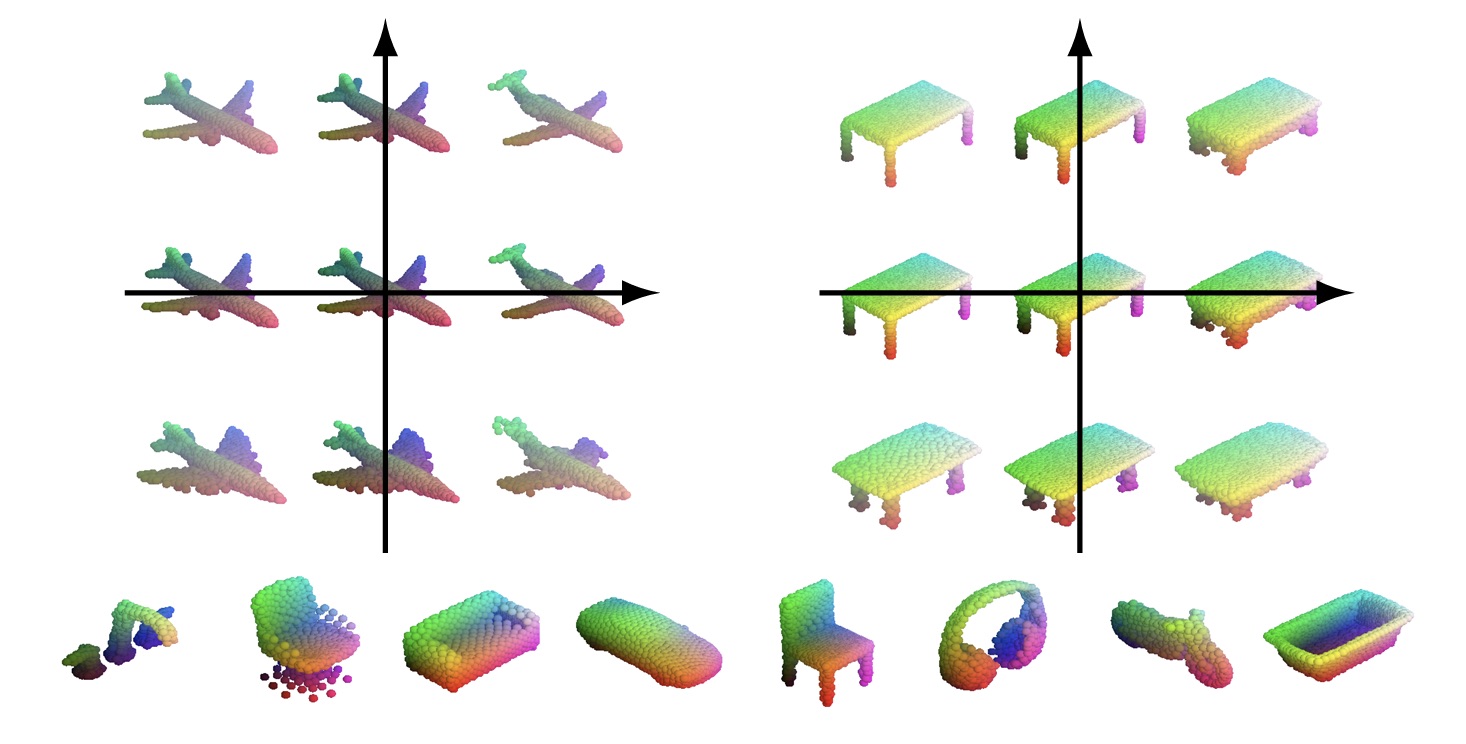

My research is focused on learning things from images without annotations, with a particular interest in recovering the underlying 3D (see representative papers). I am always looking for PhD interns, so feel free to reach out!